机器学习第二章笔记

/ / 点击 / 阅读耗时 5 分钟第二章

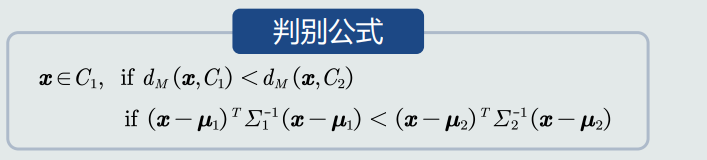

1.MED分类器

均值是对类中所有训练样本代表误差最小的一种表达方式

MED分类器采用欧式距离作为距离度量,没有考虑特征变化的不同及特征之间的相关性

1.对角线元素不相等:每维特征的变化不同

2.非对角元素不为0:特征之间存在相关性

代码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57def MED(dataset, is_2d=None):

# 实现MED分类器

train = dataset['train']

test = dataset['test']

target = dataset['target']

train_pos_mean_2d = None

train_neg_mean_2d = None

# 画2d图

if is_2d == True:

scaler = pre.StandardScaler()

pca = PCA(n_components=2)

train_2d = copy.deepcopy(train)

label = train_2d[:, -1]

train_2d = scaler.fit_transform(train_2d[:, :4])

train_2d = pca.fit_transform(train_2d)

train_2d = np.c_[train_2d, label]

train_pos = np.array(train_2d[train[:, -1] == target[0]])

train_neg = np.array(train_2d[train[:, -1] == target[1]])

train_pos_mean_2d = np.mean(train_pos[:, :2], axis=0)

train_neg_mean_2d = np.mean(train_neg[:, :2], axis=0)

# target[0]为正样本,target[1]为负样本

train_pos = np.array(train[train[:, -1] == target[0]])

train_neg = np.array(train[train[:, -1] == target[1]])

print('positive num:', train_pos.shape)

print('negative num:', train_neg.shape)

# 分别取平均

train_pos_mean = np.mean(train_pos[:, :4], axis=0)

train_neg_mean = np.mean(train_neg[:, :4], axis=0)

x_test = test[:, :4]

y_test = test[:, -1].flatten()

y_pre = np.array([])

for i in range(x_test.shape[0]):

# 欧氏距离

dis_pos = (x_test[i] - train_pos_mean).T @ (x_test[i] - train_pos_mean)

dis_neg = (x_test[i] - train_neg_mean).T @ (x_test[i] - train_neg_mean)

# 预测

if dis_pos < dis_neg:

y_pre = np.append(y_pre, target[0])

else:

y_pre = np.append(y_pre, target[1])

# 准确率

acc = (y_pre == y_test.astype(int)).sum() / y_test.shape[0]

print(acc)

if is_2d == True:

return train_pos_mean_2d, train_neg_mean_2d

else:

return y_test, y_pre

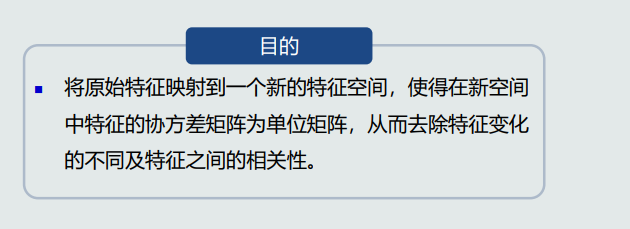

2.特征白化

目的

步骤

1.解耦,去除特征之间相关性,即使对角元素为0,矩阵对角化

2.白化,对特征进行尺度变化,使所有特征具有相同的方差

原始特征投影到协方差矩阵对应的特征向量上,其中每一个特征向量构成一个坐标轴

转换前后欧氏距离保持一致,W1只起到旋转作用

代码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34def whitening(dataset):

# 进行特征白化

data = dataset.data

print('data shape:', data.shape)

# 计算协方差矩阵

cov = np.cov(data, rowvar=False)

print('cov shape:', cov.shape)

# 特征值,特征向量

w, v = np.linalg.eig(cov)

print('w shape:', w.shape)

print('v shape:', v.shape)

# 单位化特征向量

sum_list = []

for i in range(v.shape[0]):

sum = 0.

for j in range(v.shape[1]):

sum += v[i][j] * v[i][j]

sum_list.append(math.sqrt(sum))

for i in range(v.shape[0]):

for j in range(v.shape[1]):

v[i][j] /= sum_list[i]

# 计算W

W1 = v.T

print('W1 shape:', W1.shape)

W2 = np.diag(w ** (-0.5))

print('W2 shape:', W2.shape)

W = W2 @ W1

print('W shape:', W.shape)

data = data @ W

print('data shape', data.shape)

return data

3.MICD分类器

代码有空再补